MagicAnimate Reviews:A model framework to transform images to dynamic videos

About MagicAnimate

MagicAnimate from ByteDance Inc team allows you to animate a human image following a given motion sequence.Temporally Consistent Human Image Animation using Diffusion Model

TL;DR: We propose MagicAnimate, a diffusion-based human image animation framework that aims at enhancing temporal consistency, preserving reference image faithfully, and improving animation fidelity.

Just as a joke, it’s nice to see that the dancing girls in the picture at least had blurred faces. ? But it’s a really nice one!Congrats on the launch

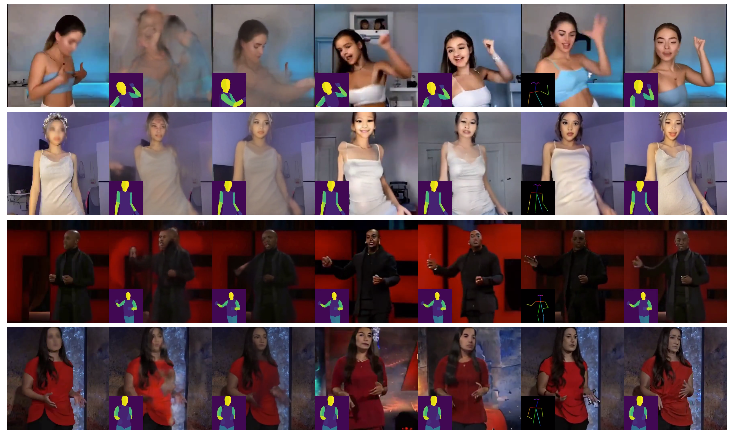

Video Results

▶ Animating Human Image

MagicAnimate aims at animating the reference image adhering to the motion sequences with temporal consistency.

▶ Qualitative Comparisons

Video resutls for comparisons between MagicAnimate and baselines.

▶ Cross-ID Animation

Comprisons between MagicAnimate and SOTA baselines for cross-ID animation, i.e., aniamting reference images using motion sequences from different videos. We show video results for three identities and two motion sequences.

| Motion Sequence 1 | Motion Sequence 2 |

| Reference | MRAA* | DisCo | Ours | MRAA* | DisCo | Ours | ||

Applications

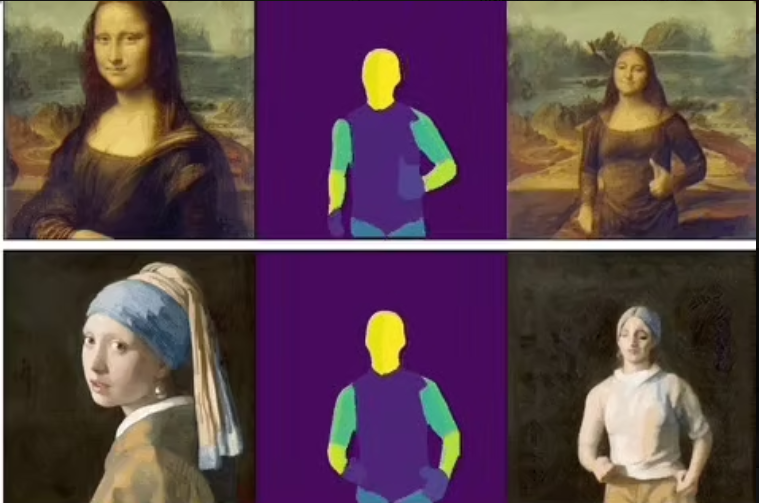

▶ Unseen Domain Animation

Animating unseen domain images such as oil painting and movie character to perform running or doing Yoga.

| Reference | Motion | Animation | Reference | Motion | Animation |

▶ Combining MagicAnimate with T2I Diffusion Model

Animating reference images generated by DALLE3 to perform various actions. Text prompt for each reference image is shown below each row of the video.

| Reference | Motion | Animation | Reference | Motion | Animation |

| “A woman doing yoga in the universe, surrounded by supernova.” |

| “a man standing on top of a mountain, surrounded by ancient remains.” |

| “A woman researcher in the space station.” |

▶ Multi-person Animation

Animating multi-person following the given motion.

| Reference | Motion | Animation |

Pipeline

Given a reference image and the target DensePose motion sequence, MagicAnimate employs a video diffusion model and an appearance encoder for temporal modeling and identity preserving, respectively (left panel). To support long video animation, we devise a simple video fusion strategy that produces smooth video transition during inference (right panel).